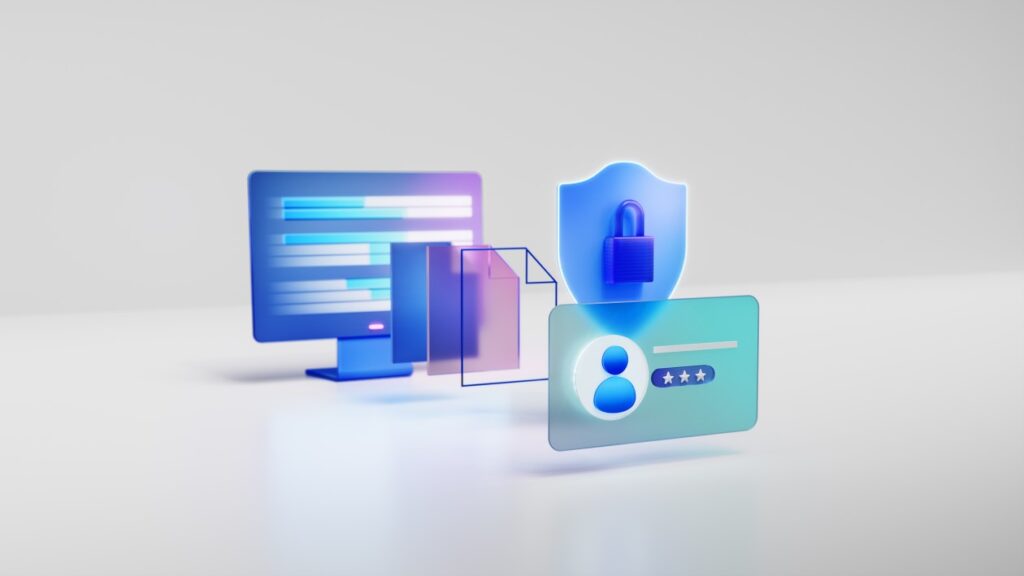

Generative AI can supercharge productivity, but only if it’s built on a secure foundation. Azure AI pairs powerful foundation models with enterprise-grade controls for encryption, access, and threat protection. It enables teams to innovate without exposing data or users to unnecessary risk. Below, we’ll cover how Azure AI secures data with encryption, how to prevent the production of harmful content, and more.

Azure AI Security with Encryption

Azure services encrypt data by default. Platform-managed keys (PMKs) are encryption keys generated, stored, and managed entirely by Azure. Customers do not interact with PMKs. Customer-managed keys (CMK), on the other hand, are keys read, created, deleted, updated, and/or administered by one or more customers.

Regulated teams can bring customer-managed keys (CMK) in Azure Key Vault to meet stricter controls and key-lifecycle requirements. This model lets you rotate, revoke, and audit keys while the Azure service performs the encrypt/decrypt operations on your behalf. Specific services expose clear setup paths for CMK. For example, Azure AI Search automatically encrypts data with Microsoft-managed keys and supports CMK for an additional layer of control (including emergency key revocation). It’s best to start with default encryption, then adopt CMK where policy or compliance demands customer control of key material.

Role-Based Access Controls (RBAC) in Azure AI

Least-privilege access is non-negotiable for AI projects that span data, prompts, and model configurations. Azure AI Foundry is a unified platform-as-a-service offering for enterprise AI operations, model builders, and application development. It provides built-in roles you can assign at the account and project levels:

- Azure AI User: Read access to accounts/projects plus data actions within a project.

- Azure AI Project Manager: Manages projects, builds and develops within them, and can assign the Azure AI User role.

- Azure AI Account Owner: Full account and project management, including creating new Foundry accounts.

It is best to treat “Project Manager” as an elevated builder (with data actions). Reserve “Account Owner” for platform admins who create/ govern accounts, not day-to-day model work. Azure OpenAI and other Azure resources also honor standard Azure RBAC patterns for fine-grained authorization. This ensures security teams can align AI projects with existing enterprise governance.

Threat Protections in Azure AI

Security doesn’t stop at encryption and RBAC. Azure adds content, application, and cloud-workload defenses that are tuned for AI:

- Prompt Shields & Safety Filters: Azure AI Content Safety allows users to build robust guardrails for generative AI, such as detecting and blocking prompt injection and unsafe content before/after generation (violence, hate, sexual, self-harm). It also mitigates security threats, prevents hallucinations, and identifies protected material. Protected material detection helps identify copyrighted or owned content in model outputs.

- Network & Data Plane Controls: Azure AI Search supports IP firewalls, private endpoints, and document-level permissions for granular data access and network isolation. This is an important tool for searches that combine different methods (like keyword matching and meaning-based search) to give large language models (LLMs) better backup and more accurate answers.

- Defender for Cloud (AI Threat Protection): Centralize alerts for AI services, correlate incidents, and hunt across identities, endpoints, and cloud resources in Microsoft Defender XDR. This tool ensures security teams see AI threats in the same dashboard they use to monitor everything else.

Video

Microsoft’s short overview shows how to choose a foundation model, enable Azure AI Content Safety, configure prompt shields, set groundedness detection, and evaluate your safety posture before you ship.

Keeping Generative AI Safe

Keeping generative AI safe can be complex. Encrypting everything by default, enforcing least-privilege with Azure RBAC, and continuously detecting, investigating, and responding to risks with Content Safety and Defender for Cloud/XDR can make this process simpler. With these controls in place, you can be confident your Azure AI deployments are protected end-to-end. If you are interested in getting started with Azure AI, contact our team of experts.